During my 5+ years of building and designing internal tools and libraries for over 1,000 developers at CyberArk's platform engineering division, I've learned (often the hard way) what makes internal tools succeed or fail.

In this blog post, I'll share practical, battle-tested insights on gathering feedback, release management, effective documentation, measuring adoption, improving adoption through DevEx, and embracing an "internal open-source" product mindset.

My Journey Toward Internal Tool Excellence

I work in CyberArk's platform engineering division. Over the last 5+ years, I've designed, built, or reviewed many of our internal libraries and tools. We have libraries for tenant isolation, logging, metrics, and feature flags; tools such as AWS WAF rules and a Datadog logs exporter; and automation that creates new SaaS services. That automation, which I designed, was featured in an AWS case study describing how we reduced time to production by 18 weeks per new SaaS service and saved over 1 million dollars.

In addition, I maintain a couple of open-source projects that have over 600 GitHub stars and half a million downloads:

- aws-lambda-env-modeler - a Python library designed to simplify managing and validating environment variables in your AWS Lambda functions.

- aws-lambda-handler-cookbook - a serverless service blueprint with an AWS Lambda function and AWS CDK Python code, with all the best practices and a complete CI/CD pipeline.

I made many mistakes along the way but also adapted my mindset and methods.

Let's review the lessons and tips I've gathered over the years for building tools that developers actually want to use.

Treat it as a Product, but with Internal Customers

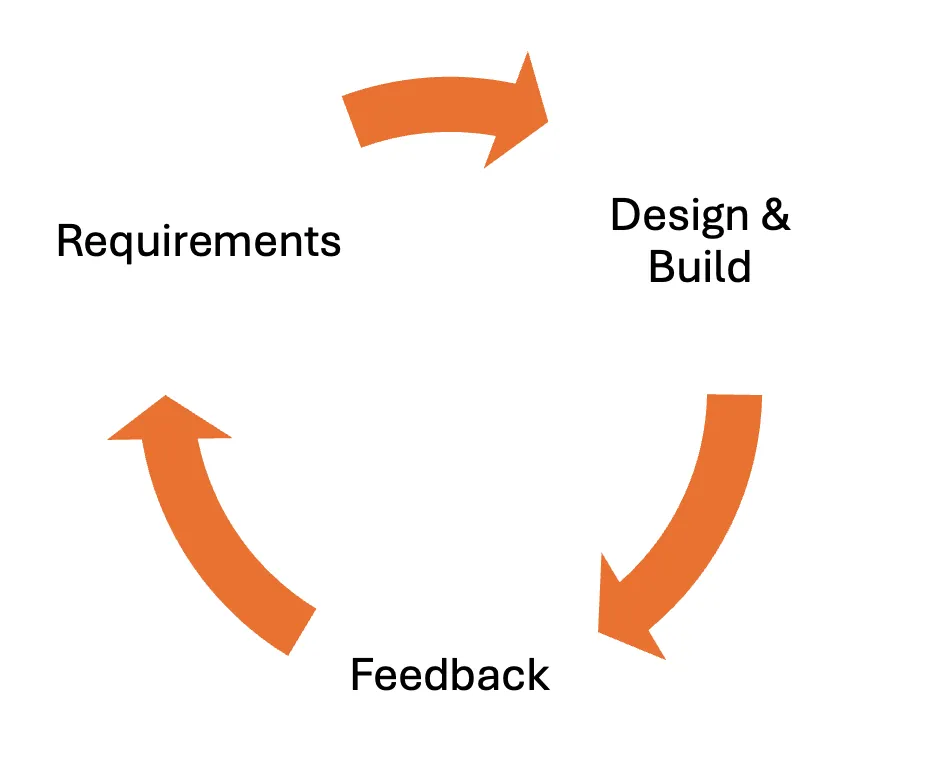

As platform engineers, we cater to the needs of internal customers: our fellow developers. However, the fact that they are developers like us doesn't make them any less our customers, and they deserve to be treated as such. That's why we need a product team member who leads the product development cycle, from gathering requirements through implementation to feedback.

Notice the diagram's circular shape: an internal tool evolves and changes over time.

Gather Requirements

Your internal tools are products, and the teams that use them are your customers. Build something they actually want.

First, we clearly define what we're going to build. We gather requirements from multiple teams—not just one—because our goal is to build tools that benefit several teams across the organization, promoting consistency and improving governance.

We want to adjust the design so it fits everybody's requirements.

I usually email all relevant system architects or contact them directly and ask them for feedback on the requirements we wrote down.

It's essential to let developers and architects know that their voices matter. Don't end up being the team that forces everybody to use its tools just because management said so. Your customers need to see the value of the shiny new tool. Otherwise, they'll build their own alternatives and negate all the advantages that platform engineering brings.

Put Yourself in Their Shoes

Once requirements are set, it's time for the design and build.

This can be challenging - you don't want to build multiple flavors for different teams' needs (maintenance nightmare), and you don't want to offer too many customizations, either.

The sweet spot is building a tool that caters to the most common requirements AND offers some means of customization that every team can add on its own. Think of it as a hook that teams can build and use in your tool. For libraries, this can be a function pointer with a defined input/output interface that your tool calls, but each team provides its own function.
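Here is a minimal Python sketch of such a hook, assuming a hypothetical logging library: the library owns the common behavior and calls an optional, team-provided function that follows a fixed input/output contract. The names `LogEnricher`, `format_log_record`, and `add_team_context` are illustrative and not taken from any real library.

```python
from typing import Callable, Optional

# The contract every team-provided hook must follow (hypothetical):
# it receives the log record as a dict and returns a (possibly enriched) dict.
LogEnricher = Callable[[dict], dict]


def format_log_record(
    message: str,
    service: str,
    enricher: Optional[LogEnricher] = None,
) -> dict:
    """Build a structured log record, letting the caller customize it via a hook."""
    # Common behavior shared by every team.
    record = {"message": message, "service": service}
    # Team-specific customization, called only through the defined interface.
    if enricher is not None:
        record = enricher(record)
    return record


# Example hook owned by one team; the library never needs to know about it.
def add_team_context(record: dict) -> dict:
    return {**record, "team": "payments", "cost_center": "cc-123"}


default_record = format_log_record("order created", "orders")
# team_record also contains the "team" and "cost_center" keys added by the hook.
team_record = format_log_record("order created", "orders", enricher=add_team_context)
```

Because the hook only receives and returns a plain dict, the library can change its internals freely as long as that contract stays stable.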

Dogfooding is also critical—use the tool yourself before you send it to early adopters. Try to use it as naively as possible, assuming nothing.

Lastly, ensure you get one or two early adopters to provide feedback on your design - the earlier they see it, the better!

Gather Feedback

Now that we have built the tool that should meet our customers' requirements, it is time to get their feedback on the finished product. Be open to feedback, as sometimes there can be some hidden assumptions or miscommunications.

It's ok to make changes and add new requirements; in the end, an internal tool is a living entity that evolves along the way.

Keep High Standards

A well-finished internal tool earns the respect and trust of developers. Would you use a flaky tool? Of course not, and neither would they.

Strive for the highest quality. Everything should be thoroughly tested and automated as much as possible. Your customers shouldn't need to perform manual, error-prone steps; your tool should just work, every time. And when the odd bug does slip through, it should be clear what happened and how to fix it.

If you fail to do so, developers will not want to use your tool and will complain, and rightly so.
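One way to make failures self-explanatory is to raise errors that state what went wrong, why it matters, and how to fix it. The sketch below assumes a hypothetical library that reads its configuration from an environment variable; `ConfigurationError`, `load_service_name`, and the `SERVICE_NAME` variable are illustrative names.

```python
import os
from typing import Mapping, Optional


class ConfigurationError(Exception):
    """Raised when the library is misconfigured; the message explains how to fix it."""


def load_service_name(env: Optional[Mapping[str, str]] = None) -> str:
    """Read the service name the library needs, failing with an actionable message."""
    # Default to the real process environment; tests can pass a plain dict instead.
    env = os.environ if env is None else env
    value = env.get("SERVICE_NAME", "").strip()
    if not value:
        # State what is missing, why it matters, and the exact fix.
        raise ConfigurationError(
            "Environment variable SERVICE_NAME is missing or empty. "
            "It is used to tag every log record and metric. "
            "Fix: set SERVICE_NAME in your function's environment, e.g. SERVICE_NAME=orders."
        )
    return value
```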

It's All About the DevEx

Good developer experience leads to increased adoption and happy internal customers. DevEx is about how easy it is to use, understand, debug, and migrate to your tool.

Keep it Simple

When building the tool, make just one assumption: your customers know nothing about the tool, how to use it, or the technology behind it. Make it as simple as possible to use. For example, when building libraries, use few arguments with simple, meaningful names, and write clear docstrings. The simpler, the better.
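For instance, a hypothetical metrics helper could expose a single function with one required argument, sensible defaults, and a docstring that explains usage without requiring any knowledge of the backend. `add_metric` and its parameters are illustrative only.

```python
from typing import Dict, Optional


def add_metric(
    name: str,
    value: float = 1.0,
    unit: str = "Count",
    dimensions: Optional[Dict[str, str]] = None,
) -> dict:
    """Record a single metric data point.

    Args:
        name: What you are measuring, e.g. "OrderCreated".
        value: The measured value. Defaults to 1 (one occurrence).
        unit: The unit of the value. Defaults to "Count".
        dimensions: Optional extra labels, e.g. {"tenant": "acme"}.

    Returns:
        The data point as a dict, ready to be sent by the library.
    """
    return {"name": name, "value": value, "unit": unit, "dimensions": dimensions or {}}
```

With this shape, `add_metric("OrderCreated")` covers the most common case in one line, and the optional arguments exist only for teams that need them.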

Automate CI/CD Pipeline and Infrastructure

Some libraries or tools have infrastructure dependencies. For example, a feature flag library might require an AppConfig configuration deployment or a LaunchDarkly API key stored in SSM Parameter Store. Your job is to make getting it working as smooth as possible for developers. In addition to the library, provide CDK constructs or Terraform templates that provision the required resources and store the required values in SSM Parameter Store under the correct key.
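One way to guarantee that the library and the infrastructure code agree on "the correct key" is to derive the parameter name from one shared function. The sketch below assumes a hypothetical naming convention (`/platform/feature-flags/<service>/launchdarkly-api-key`) and takes the parameter fetcher as an argument so it runs without AWS; in a Lambda function, that fetcher could wrap boto3's SSM `get_parameter` call with `WithDecryption=True`.

```python
from typing import Callable

# Hypothetical naming convention shared by the infrastructure code and the library.
_PARAMETER_PREFIX = "/platform/feature-flags"


def api_key_parameter_name(service_name: str) -> str:
    """Single source of truth for where the API key lives in SSM Parameter Store."""
    if not service_name or "/" in service_name:
        raise ValueError("service_name must be a non-empty string without '/'")
    return f"{_PARAMETER_PREFIX}/{service_name}/launchdarkly-api-key"


def load_api_key(service_name: str, fetch_parameter: Callable[[str], str]) -> str:
    """Fetch the API key using the shared parameter name.

    fetch_parameter is injected to keep the library testable. In production it could be:
    lambda name: boto3.client("ssm").get_parameter(Name=name, WithDecryption=True)["Parameter"]["Value"]
    """
    return fetch_parameter(api_key_parameter_name(service_name))
```

A CDK construct written in Python could call the same `api_key_parameter_name` function when it creates the parameter, while a Terraform module would have to mirror the convention; either way, developers never type the key path by hand.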

If teams need to migrate from an existing tool to yours, provide CI/CD automation, CLIs, and scripts that help them make the switch.
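As an illustration, a migration script can take care of the mechanical part of a switch, such as rewriting imports. This sketch assumes hypothetical module names (`legacy_logging_lib` being replaced by `platform_logger`) and is not a complete migration tool.

```python
import re
from pathlib import Path

# Hypothetical old and new module names.
OLD_MODULE = "legacy_logging_lib"
NEW_MODULE = "platform_logger"

# Matches "import legacy_logging_lib..." and "from legacy_logging_lib[.sub] import ..."
# at the start of a line; \b avoids touching similarly named modules.
_IMPORT_PATTERN = re.compile(rf"^([ \t]*)(from|import)\s+{OLD_MODULE}\b", re.MULTILINE)


def migrate_source(source: str) -> str:
    """Rewrite imports of the old module to the new one, keeping indentation."""
    return _IMPORT_PATTERN.sub(rf"\1\2 {NEW_MODULE}", source)


def migrate_tree(root: Path) -> int:
    """Rewrite every .py file under root in place and return how many files changed."""
    changed = 0
    for path in root.rglob("*.py"):
        original = path.read_text(encoding="utf-8")
        updated = migrate_source(original)
        if updated != original:
            path.write_text(updated, encoding="utf-8")
            changed += 1
    return changed
```

A small CLI or CI job could call `migrate_tree` on a repository checkout. Note that the script rewrites only import statements; qualified references such as `legacy_logging_lib.get_logger()` still need a follow-up change.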

Documentation

I view internal tools as internal open source. As such, they should be documented to the highest standard, both at the code level (for libraries) and on GitHub Pages. We provide a GitHub Pages site for every tool, covering its design, tips, guides, and code examples.

I highly recommend checking out the GitHub Pages sites of my open-source projects to see these concepts in practice:

- https://ran-isenberg.github.io/aws-lambda-env-modeler/

- https://ran-isenberg.github.io/aws-lambda-handler-cookbook/

Release Notes

Use semantic versioning (https://semver.org/) and strive to keep breaking changes to a minimum, ideally avoiding them altogether. You can generate release notes with GitHub's automatically generated release notes feature. Publish new release notes at least once a month, especially for new minor or major versions. A major benefit here is visibility, both for you and for your customers: teams can use GitHub's 'watch' feature to get notified when new release notes are published.
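To make the versioning rules concrete, here is a small sketch of how the next version number follows from the kind of change being released, per the MAJOR.MINOR.PATCH rules of Semantic Versioning 2.0.0 (pre-release and build metadata are ignored for brevity). `Change` and `next_version` are illustrative helpers, not part of any release tool.

```python
from enum import Enum


class Change(Enum):
    BREAKING = "breaking"  # incompatible API change -> MAJOR
    FEATURE = "feature"    # backward-compatible new functionality -> MINOR
    FIX = "fix"            # backward-compatible bug fix -> PATCH


def next_version(current: str, change: Change) -> str:
    """Return the next MAJOR.MINOR.PATCH version for the given change type."""
    major, minor, patch = (int(part) for part in current.split("."))
    if change is Change.BREAKING:
        # Bumping MAJOR resets MINOR and PATCH.
        return f"{major + 1}.0.0"
    if change is Change.FEATURE:
        # Bumping MINOR resets PATCH.
        return f"{major}.{minor + 1}.0"
    return f"{major}.{minor}.{patch + 1}"
```

Every `Change.BREAKING` forces a new major version, which is exactly why keeping breaking changes rare matters: each one asks every customer to plan an upgrade.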

Code Contributions

It wouldn't be a proper open source without code contributions, right?

Platform engineering teams can sometimes become bottlenecks. Accepting code contributions removes that bottleneck and lets teams get the features they need without forking the entire tool.

You must define a clear contribution process and standards (documentation, tests, automation, etc.).

- Talk with code owners and explain the requirements.

- Once you get the green light, start developing.

- Once the contribution is merged, the platform team takes full ownership of the code, and the contributor has no further responsibility for it.
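Part of the contribution standards can be enforced automatically. The sketch below is a hypothetical CI check that assumes a repository layout with source code under `src/`, tests under `tests/`, and documentation under `docs/`; it reports a problem when a pull request changes source code without touching tests or docs.

```python
from typing import Iterable, List


def contribution_problems(changed_files: Iterable[str]) -> List[str]:
    """Return human-readable reasons why a contribution does not meet the standards."""
    files = list(changed_files)
    touches_source = any(f.startswith("src/") for f in files)
    problems = []
    if touches_source and not any(f.startswith("tests/") for f in files):
        problems.append("Source code changed but no tests were added or updated under tests/.")
    if touches_source and not any(f.startswith("docs/") for f in files):
        problems.append("Source code changed but the documentation under docs/ was not updated.")
    return problems
```

A CI step could feed it the output of `git diff --name-only origin/main...HEAD` and fail the build when the returned list is not empty, so contributors see the expected standards before a code owner even reviews the pull request.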

Ongoing Maintenance

Tools require maintenance over time—upgrading runtime, updating dependencies, solving bugs, and more. Tools that rot get forked fast!

You should define a monthly process for updating dependencies, a clear process for opening bugs, and an SLA for solving them; otherwise, frustration will kick in. It's all about expectations.
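Writing the SLA down as data makes it easy to surface in dashboards or bug-tracker automation. The severities and resolution times below are placeholder assumptions, not recommendations; pick values your team can actually honor.

```python
from datetime import datetime, timedelta

# Hypothetical SLA: maximum time to resolve a bug, per severity.
BUG_SLA = {
    "critical": timedelta(days=1),
    "high": timedelta(days=7),
    "medium": timedelta(days=30),
    "low": timedelta(days=90),
}


def resolution_due(opened_at: datetime, severity: str) -> datetime:
    """Return the date by which a bug of the given severity should be fixed."""
    return opened_at + BUG_SLA[severity.lower()]


def is_overdue(opened_at: datetime, severity: str, now: datetime) -> bool:
    """True if a still-open bug has passed its SLA."""
    return now > resolution_due(opened_at, severity)
```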

Another thing to consider: at some point, you will need to support multiple runtime versions simultaneously, for example, Python 3.12 and Python 3.13. You can't just release a new major version for Python 3.13 and drop support for 3.12 overnight, because your customers won't upgrade to 3.13 immediately. In addition, when you fix bugs, you will also need to release the fix for Python 3.12.

Define clear runtime deprecation dates and communicate them to your customers.
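A library can also announce its own deprecation dates, for example, by warning when it runs on a runtime that is out of support or close to it. The version-to-date table and the `check_runtime` helper below are hypothetical: the dates describe an imagined support policy for the library, not CPython's official end-of-life dates. `FutureWarning` is used because, unlike `DeprecationWarning`, Python shows it by default.

```python
import sys
import warnings
from datetime import date
from typing import Optional, Tuple

# Hypothetical library support policy: runtime -> last date it receives fixes.
RUNTIME_END_OF_SUPPORT = {
    (3, 12): date(2026, 6, 30),
    (3, 13): date(2027, 6, 30),
}


def check_runtime(
    version: Optional[Tuple[int, int]] = None,
    today: Optional[date] = None,
) -> Optional[str]:
    """Warn (and return the message) if the runtime is unsupported or nearing end of support."""
    version = version or (sys.version_info.major, sys.version_info.minor)
    today = today or date.today()
    label = f"Python {version[0]}.{version[1]}"
    end = RUNTIME_END_OF_SUPPORT.get(version)
    if end is None:
        message = f"{label} is not a supported runtime for this library."
    elif today > end:
        message = f"Support for {label} ended on {end.isoformat()}."
    elif (end - today).days <= 90:
        message = f"Support for {label} ends on {end.isoformat()}; plan your upgrade."
    else:
        return None
    warnings.warn(message, FutureWarning, stacklevel=2)
    return message
```

Calling `check_runtime()` once from the package's `__init__.py` would put the same dates you announce in release notes directly into each team's logs.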

Sharing the Knowledge

It's great that you built an incredible new tool, but if nobody knows about it, nobody will use it!

Create ceremonies such as bi-weekly demos to discuss new tools or best practices and get immediate developer feedback. We've been doing it for years, and it has worked great.

In addition, you can send email notifications to team leads and architects or even create a platform day meeting once a quarter where you share all the new tools and practices.

If you have templates for new services (check out my Serverless service template), you should add the latest tools/libraries to the templates so people will notice them when they start a new service.

Measure Adoption

Platform engineering is measured by adoption. The higher the adoption, the greater the impact and the more organizational waste it eliminates.

Different tools call for different measurement methods, ranging from a manual usage search in GitHub, to questionnaires and feedback meetings, to an automated approach using AWS Service Catalog, which I describe in my AWS.com article "Serverless Governance of Software Deployed with AWS Service Catalog." Without visibility, you won't know what to improve or how. Questionnaires are also a great way to gather customer feedback and understand why teams aren't using some of your tools.
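For the manual GitHub search, the calculation itself is simple: count the services whose dependency files reference your library. The sketch below assumes you have already collected each repository's `requirements.txt` contents (for example, through GitHub code search or a script that clones the repositories) and uses a hypothetical library name in the usage note.

```python
import re
from typing import Dict


def _normalize(name: str) -> str:
    # PEP 503 normalization: case-insensitive, and runs of "-", "_", "." are equivalent.
    return re.sub(r"[-_.]+", "-", name).lower()


def uses_library(requirements: str, library: str) -> bool:
    """True if a requirements.txt-style file declares the library (any version specifier)."""
    target = _normalize(library)
    for line in requirements.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options such as -r
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match and _normalize(match.group(0)) == target:
            return True
    return False


def adoption_rate(repos: Dict[str, str], library: str) -> float:
    """Fraction of repositories (name -> requirements text) that depend on the library."""
    if not repos:
        return 0.0
    adopters = sum(uses_library(text, library) for text in repos.values())
    return adopters / len(repos)
```

Tracking `adoption_rate(repos, "platform-logger")` over time shows whether demos, templates, and documentation are actually moving the needle.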


Summary

In this blog post, we covered numerous ways platform engineers can build tools that developers want to use. We covered treating an internal tool as a product with a lifecycle, from requirements through design and feedback, as well as implementation aspects such as DevEx, knowledge sharing, and adoption measurement.