Agentic AI and MCP servers are rapidly becoming the hottest trends in modern software development. Every week, new tools and techniques are introduced to accelerate code writing and delivery. At its core, platform engineering helps developers move faster without sacrificing governance, security, or best practices.

But how do these emerging technologies fit into a platform engineering strategy?

In this post, I’ll show how platform teams can build the foundational building blocks—like prompt libraries, secure MCP server blueprints, and MCP data connectors—that enable safe, governed adoption across the organization. I’ll also share practical examples of how these foundations power real use cases, from code reviews to HLD generation and platform adoption (vibe platform adoption!).

Agentic AI and MCP in the Organization Landscape

Agentic AI and the Model Context Protocol (MCP) have overtaken engineering.

From "vibe coding" experiments to rapidly evolving open-source SDKs, the pace of innovation is stunning. Yet early adoption comes with real concerns, especially around security. As Allen Helton, AWS Serverless hero, pointed out in his recent post, your MCP server is cool, but out of the box, it's public, and you don't know who might be abusing it.

Ask any IT professional, and they'll tell you their worst nightmare: developers using unauthorized "open-source" MCP servers for their agentic AI needs or, even worse, building an insecure one themselves without proper governance, security, or standards.

Platform Engineering, MCP and Agentic AI

That's where platform engineering comes in. It's not about resisting the trend—it's about adopting it smartly across the organization.

And it doesn't stop with MCP. While anyone can spin up an Amazon Q or a copilot agent, the goal is to achieve deterministic outcomes that align with organizational best practices, coding conventions, and security requirements.

Platform engineering teams must lead the charge in enabling the safe, scalable use of agentic AI and MCP across their organizations - Ran Isenberg

In this post, I'll share several practical ideas and patterns that platform teams can implement—some I've seen across the industry and others I'm actively developing as part of my work in CyberArk's platform engineering division.

Don't know what MCP is? Check out this post.

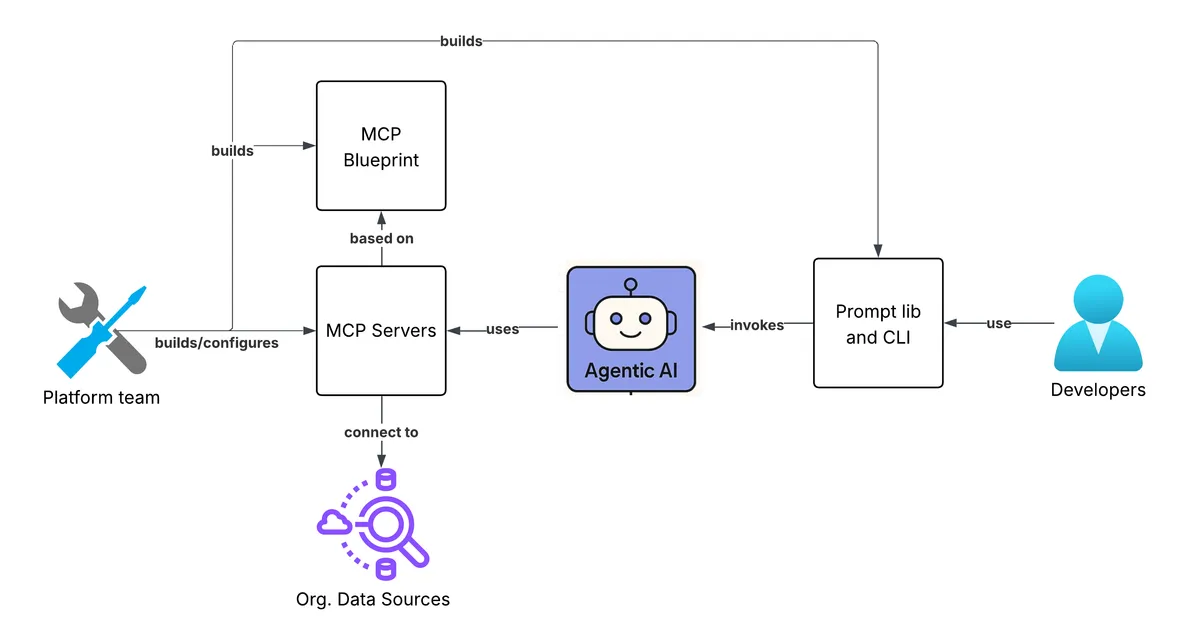

Agentic AI & MCP Platform Engineering Foundations

Let's cover the three building blocks that your platform engineering team must build for a secure and governed agentic AI & MCP adoption:

- Prompt Library & Org. CLI for Running Agents

- MCP Server Blueprint

- Connect Organizational Data via MCP Servers

I assume you can access Amazon Q, Claude, or Copilot agentic AI.

Prompt Library & Org. CLI for Running Agents

Let's start with a trend gaining traction online: developers share battle-tested prompts in their GitHub repositories. While helpful, these prompts are often opinionated and rarely align with an organization's specific coding standards or architectural conventions.

At CyberArk, we built a CLI that runs everything—linters, complexity checks, tests, you name it—configured from a centralized, versioned GitHub repository. It's kept up to date and reflects our evolving platform standards.

So why not do the same for prompts? Why not treat prompt engineering as a first-class developer tool?

By wrapping internal, battle-tested prompts in CLI commands, we can either inject them directly into Copilot extensions or other agentic workflows or copy the prompt to the clipboard (like pbcopy in Mac)—ensuring developers start with the proper context every time.

It's fast and reliable, and developers love the productivity boost.

MCP Server Blueprint

Obviously, most of the ideas I'll share here require MCP servers. Your organization will either use them from trusted sources or build them internally. Instead of having to reinvent the wheel every time, use blueprints! GitHub template repositories with all the best practices, governance, observability and security (WAF, IP restrictions, id tokens etc.) built-in. That way, your developers will just focus on writing that 'mcp.tool' business logic instead of dealing with non-business oriented issues.

I'll share my own version of a serverless MCP blueprint once i'm satisfied with the outcomes. I wrote a blog post about the current MCP on Lambda status, which is not great in my opinion. For now, you can check my other blueprints : AWS Lambda handler cookbook and my collection of awesome serverless blueprints.

Connect Organizational Data Sources via MCP Servers

The last foundation connects MCP servers to the organization's data sources, such as Jira, GitHub, Confluence, and AWS accounts. These MCPs provide organizational context to the agentic AI tools.

I prefer using well-maintained open-source MCP servers, such as GitHub's official MCP server or JIRA/Confluence"s server, and over-building them myself.

The platform team will set up the MCP servers with all the security bells and whistles. The team can connect other data sources that don't have an official MCP by building the MCP servers using the MCP blueprint.

Agentic AI & MCP & Platform Engineering Ideas

Now that we have our three foundational building blocks let’s review some ideas for combining agentic AI and MCP to increase governance and security and improve developer efficiency across the organization.

Vibe Platform Adoption

If vibe coding is a thing, surely there's a place for vibe platform adoption.

Building a platform of engineering tools, services, and SDKs is one thing, but having developers adopt the tools is another. In my blog post "Stop Building Internal Tools Nobody Wants: A Platform Engineer's Guide," I covered many tips and tricks to increase adoption.

However, no matter how amazing the UX is or how well the documentation is written, developers still need to make code changes and pay attention to detail.

Let's automate this process with our MCP & agentic AI building blocks.

I'll share an example I'm building at work - observability governance.

I want every serverless service to use the platform's correlation-id SDK in two main places:

- Service entry - parse correlation ID HTTP headers and inject them into all service logs.

- Pass the correlation ID headers over REST HTTP headers and SNS attributes to other services.

Having the correlation ID in all the service logs allows developers and SREs to understand how one customer request moved between services and identify issues faster.

The problem starts when developers forget to use the SDK, use it wrong, or don't pass the correlation ID when calling other services.

It has to be perfect. Luckily, agentic AI can help.

We will craft a prompt that leverages MCP and understands how to use the correlation-id SDK. The MCP servers that connect your confluence page or the documentation of the SDK's GitHub pages.

Next, wrap this prompt with a CLI command that users can run, inject into the agentic AI chat, and change the code.

I was able to get this working. I ran my prompt on a complex service with dozens of Lambda functions and multiple HTTP integrations.

Ten minutes later, my service was fully correlation-id proof. Agentic AI is perfect for these tedious tasks. The best part is that it is repeatable and easy!

Developers can focus on developing their business domain instead of these tedious but important tasks. However, to keep the service aligned with platform best practices, we will add these mechanisms to the code review process so they are enforced as developers add new code - which brings me to the next idea below.

Vibe Coding and Code Review Standards

Developers can now write code and tests while automatically connecting to the platform's MCP servers that apply organizational best practices, IaC best practices, pattern templates, and coding styles in real time. Agents can auto-upgrade SDKs, IaC modules (like CDK v1 to v2 which was a nightmare to do), or external libraries, and even open pull requests with migration notes and changelogs. The majority of the work is to define all these best practices in an agent friendly format. Don't know where to start? Use AWS's MCP servers and expand - see https://github.com/awslabs/mcp?tab=readme-ov-file.

For local workflows, a CLI can run code reviews on demand, using a pregenerated prompt that connect to said MCP servers.

For GitHub vibe coding, use Amazon Q or GitHub-integrated Copilot agents to review code based on your company’s standards, stored in a centralized folder (like .org-rules/ or security-guidelines/). This ensures consistent enforcement of architecture, logging, security, and observability practices—at scale.

Our correlation-id SDK will never be forgotten again!

Architecture High Level Design Reviewer & Generator Agent

I mainly focused on code and code updates, but AI can be used for architecture design, too!

Start by creating your organization's high-level design (HLD) template and outlining key architectural best practices. If you need inspiration, check out my post, Cloud Architect's High-Level Design Template, which you can use as a solid foundation.

Make sure to embed platform-specific considerations like tenant isolation, observability practices, and even cost estimation (yes, that dark art). Once defined, expose the template via an MCP server so agents can access it in a structured, reusable way.

Next, extend your platform CLI with a new HLD review command. This command should inject a prompt like:

"You are a serverless architect. Your task is to review this HLD using the company template provided via MCP. Be as specific and critical as possible."

Now, any architect—or even a developer—can trigger an HLD review using a single CLI command, whether the HLD is hosted in Confluence, Markdown, or another MCP-integrated source.

But why stop there? If the agent can review the design, it can also generate it from scratch, break it into implementation tasks, and even sync it with Jira—all by connecting the right data sources through MCP. This isn't just automation—it's accelerated architecture at scale.

From Ideation to PR

You can take everything discussed above and push it further—connecting the entire development lifecycle into a seamless, AI-powered flow. Imagine starting with product ideation, drafting a product requirements document, generating a high-level design, and ending with an auto-generated GitHub pull request—all orchestrated through interconnected MCP servers.

While this level of automation may be more practical in startup environments or for smaller-scale products, it showcases the incredible potential of agentic AI when combined with structured platform tooling.

Want to see it in action? Take a look at Jit's demo below. They connected several MCPs together, and the results are very cool!

https://drive.google.com/file/d/18kC12tWadv2pcCTLeNkg2NjTIjlN4DYq/view

Summary

Agentic AI and MCP servers are redefining how developers interact with infrastructure, tooling, and governance—but without platform engineering oversight, the results can quickly become chaotic. In this post, I laid out a structured approach for bringing order to innovation by defining three foundational building blocks: a centralized prompt library and CLI, a secure MCP server blueprint, and connectors to organizational data sources like GitHub, Confluence, and Jira.

With these building blocks in place, the post explores several practical ways platform teams can empower developers while enforcing consistency at scale. From auto-fixing correlation ID implementation across services to agent-led HLD reviews, SDK upgrades, code standards enforcement, and even generating Jira tickets from requirements—each idea demonstrates how MCP and agentic AI can be safely operationalized across large engineering organizations.